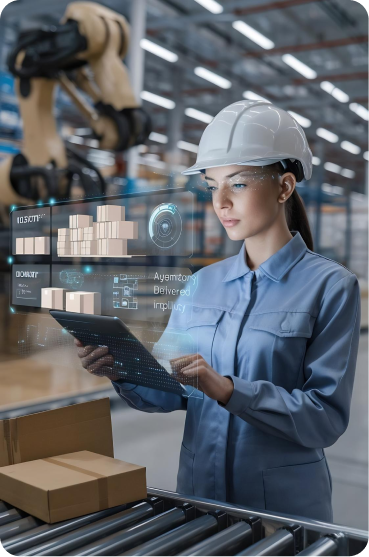

Data intelligence for how manufacturing supply chains actually operate

When materials, machines, or plans fall out of sync, the impact shows up immediately in scrap, downtime, and missed output. This accelerator helps manufacturers see execution risk earlier, plan with confidence, and protect cost per unit when variability hits.

Data intelligence, enabling manufacturing supply chain performance

Manufacturing performance is ultimately measured in unit cost, throughput, and uptime. Supply chain intelligence must connect materials, production plans, and execution signals to improve these outcomes directly.

12% lower per-unit production costs

70% faster planning cycles

40% less unplanned downtime

8-20% higher first-pass yield

Customer story

AFG Tech brought together fragmented operational data into a single, governed foundation, creating the conditions required for logistics reporting, execution visibility, and downstream analytics. Rather than spending months building custom pipelines, the team focused on establishing a scalable data foundation.

To deploy data lakehouse

Operational workflows

What you can achieve

Real execution scenarios where material flow, planning decisions, and shop floor realities determine manufacturing performance.

Material and production teams see execution issues sooner, allowing corrective action before defects, rework, or excess scrap accumulate.

Planning teams align schedules with real material availability and capacity, reducing last-minute changes and missed commitments.

Execution risks are surfaced early, helping teams prevent stoppages instead of reacting once machines or lines are already down.

Better coordination between supply, planning, and execution recovers lost capacity without adding machines or labor.

Production, supply chain, and maintenance teams work from one shared view, accelerating decisions during daily execution and escalations.

Turn manufacturing complexity into execution control

Integrates with the manufacturing systems you already run

Manufacturing supply chains rely on ERP, MES, planning, and supplier systems. This accelerator connects to those systems directly and unifies operational signals without replacing what already works.

Frequently asked questions

Because the data foundation, ingestion pipelines, and operational models are pre-built, businesses can go live in as little as two weeks, not months. Reporting latency drops almost immediately, manual reconciliation effort is reduced, and teams start making faster, more confident decisions within the first few weeks, with measurable operational and financial impact often visible in the first quarter. Book a data discovery workshop to confirm scope, systems, and go-live timeline.

Operational data is ingested continuously or at high frequency from execution systems, so dashboards, alerts, and exception views reflect current conditions rather than yesterday’s data. Teams can identify risks, bottlenecks, and anomalies early, intervene sooner, and avoid costly last-minute recovery actions that hurt service levels and margins. Book a demo to see near-real-time decision workflows in action.

Traditional BI relies on delayed extracts, manual transformations, and static dashboards that explain what already happened. This accelerator focuses on the data foundation itself, ensuring data is timely, consistent, and context-aware before it reaches analytics. The result is execution-level insight that supports decisions while there is still time to act, not after outcomes are locked in. Book a demo to compare execution-level intelligence with traditional reporting.

Pre-built data models, automation, and natural language querying allow business users to access insights without writing SQL, building pipelines, or waiting for custom dashboards. This shifts analytics from a centralized bottleneck to a shared capability, reducing reporting backlogs and allowing lean teams to operate with enterprise-scale insight. Calculate ROI to estimate savings from reduced engineering effort and faster decision cycles.

Built by the “AWS Rising Star Partner of the Year”

LakeStack is built by Applify, a team that has spent more than a decade designing, deploying, and operating complex data platforms on AWS for enterprises across industries.

- 12+ years building production systems on AWS

- 100+ AWS certifications across the team

- 6 AWS Competencies and 9 AWS Service Validations

- 500+ SMBs served across the globe